The DMA’s recent claim, that the 23% dip in marketing effectiveness is due to a failure to promote a coherent approach to measurement, assumes a causal link between the number of metrics reported (170 different metrics across 852 individual campaigns) and the overall success of the campaigns.

The research is the second iteration of the DMA’s Intelligent Marketing Databank (the first was released in 2021). Much as the IPA does with its own awards, it draws its data from entries to the DMA Awards.

The DMA’s paper attributes the 23% decline in effectiveness in the late pandemic period, compared with the strong performance at its start, to a lack of focus on performance, brand and business metrics, and lays the blame at the feet of what it terms ‘vanity metrics’.

Below are some examples of the effects within each category.

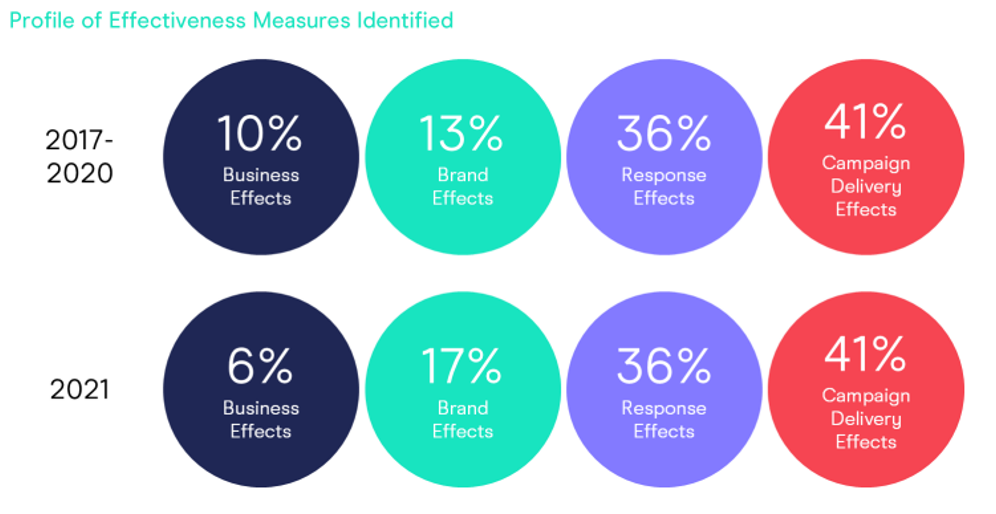

To argue a causal link, they would need to show a significant shift in how often each category was included in the entries. This data is outlined below.

At the very best, they could point to a slight shift in the inclusion of business and brand effects (I’d like to see 2017-2020 split out by year as well). But blaming delivery metrics for a 23% drop in effectiveness is a bit of a stretch.

All Response Media viewpoint

Here’s what I think. At the start of the pandemic, people went bonkers for buying stuff. TV advertising was super cheap, and people were sat at home, bored, wanting to spend the cash they weren’t spending in the pub, on transport and on kids’ clubs, so they filled their houses with stuff they probably didn’t need.

That’s a much more likely reason for an increase in effectiveness at the early stages of the pandemic; for many categories, it was like shooting fish in a barrel.

The other problem is that by criticising campaign delivery effects and calling them ‘vanity metrics’, the DMA is clashing swords with the dark lord of penetration*, Byron Sharp. If you’ve read ‘How Brands Grow’, you’ll know that reach and frequency among all category buyers, particularly light category buyers, are super important when it comes to winning market share. So these metrics should definitely be included.

They’re also super easy to measure, and therefore to include in award entries, which is probably why so many entries feature them. They beef up the entry and make it look more scientific.

In short, the range of metrics doesn’t matter at all. What does matter is that you select metrics aligned with the commercial success of your business.

If you know that increasing earned media value correlates with increasing monthly sales, then go for it. If a reduction in CPC and an increase in interest lift make you loads of money, then go for it. Metrics should be specific to a business, which is why there are so many of them, and they should be linked to commercial success, proven through regression analysis or MMM (marketing mix modelling).
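As a rough illustration of the regression check described above, the sketch below fits an ordinary least squares line of monthly sales against a single metric and reports R². The function name `fit_simple_regression` and the figures are hypothetical. A single-variable fit like this only shows correlation; a real MMM would also control for seasonality, price, distribution and other media before crediting a metric with sales.

```python
from typing import Sequence, Tuple


def fit_simple_regression(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Ordinary least squares fit of y = intercept + slope * x.

    Returns (slope, intercept, r_squared). Hypothetical helper for illustration.
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least three paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of squares and cross-products around the means.
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must both vary")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # For a one-variable fit, R^2 equals the squared Pearson correlation.
    r_squared = (sxy * sxy) / (sxx * syy)
    return slope, intercept, r_squared


if __name__ == "__main__":
    # Hypothetical monthly figures (£k): earned media value vs. sales.
    emv = [12.0, 15.0, 11.0, 18.0, 20.0, 16.0]
    sales = [140.0, 152.0, 137.0, 165.0, 171.0, 158.0]
    slope, intercept, r2 = fit_simple_regression(emv, sales)
    print(f"slope={slope:.2f}, intercept={intercept:.2f}, R^2={r2:.2f}")
```

A high R² here would justify a closer look at the metric, not adoption of it on its own.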

*Credit: Mark Ritson

Ed Feast

Director of Planning

Veronika Hulikova, Senior Econometrician, provides insight into measurement at ARM:

TV campaign measurement ranges from methods using granular, second-level data through to the weekly or monthly data more commonly used in Marketing Mix Models. At the granular end, ARMalytics allows client teams to optimise campaigns on variables such as daypart and spot length. This approach is best suited to direct response TV campaigns with reliable KPIs, such as New Visitors or Sales driven by TV.
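A minimal sketch of one common way to read immediate spot response from minute-level data, assuming a flat baseline taken from the minutes just before the spot. This is not ARMalytics’ actual method; the function `spot_uplift`, the 10-minute baseline and 5-minute response windows, and the visit counts are all hypothetical.

```python
from typing import Sequence


def spot_uplift(visits: Sequence[float], spot_minute: int,
                pre_window: int = 10, post_window: int = 5) -> float:
    """Estimate extra visits attributable to one TV spot (hypothetical helper).

    Baseline = mean visits per minute over the `pre_window` minutes before the
    spot. Uplift = sum of (visits - baseline) over the `post_window` minutes
    starting at the spot minute. The result can be negative if visits fall.
    """
    if spot_minute < pre_window or spot_minute + post_window > len(visits):
        raise ValueError("not enough data around the spot")
    pre = visits[spot_minute - pre_window:spot_minute]
    baseline = sum(pre) / pre_window
    post = visits[spot_minute:spot_minute + post_window]
    return sum(v - baseline for v in post)


if __name__ == "__main__":
    # Hypothetical visits per minute; the spot airs at minute 10.
    minute_visits = [9, 11, 10, 10, 12, 8, 10, 11, 9, 10, 34, 27, 19, 14, 11, 10]
    print(spot_uplift(minute_visits, spot_minute=10))
```

Summing uplift per spot and grouping by daypart or spot length is what makes this kind of granular read useful for optimisation.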

To answer wider questions, we apply econometric methods that look at the more holistic overall effect on the same KPIs. This approach captures wider campaign effects that are not visible at such a granular level.
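One widely used building block in econometric models of TV is an adstock transform, which carries part of each week’s advertising pressure into the following weeks so the model can pick up effects beyond the immediate response. The sketch below assumes simple geometric decay; the function name `geometric_adstock`, the decay rate and the weekly TVR figures are hypothetical, and the source does not say which transforms ARM uses.

```python
from typing import List, Sequence


def geometric_adstock(pressure: Sequence[float], decay: float) -> List[float]:
    """Geometric adstock: adstock[t] = pressure[t] + decay * adstock[t - 1].

    `decay` is the share of last period's adstock carried into this period.
    """
    if not 0.0 <= decay < 1.0:
        raise ValueError("decay must be in [0, 1)")
    adstock: List[float] = []
    carry = 0.0
    for x in pressure:
        carry = x + decay * carry
        adstock.append(carry)
    return adstock


if __name__ == "__main__":
    # Hypothetical weekly TVRs: a three-week burst followed by a gap.
    weekly_tvrs = [200.0, 150.0, 150.0, 0.0, 0.0, 0.0]
    print(geometric_adstock(weekly_tvrs, decay=0.5))
```

The adstocked series, rather than raw weekly TVRs, would then enter the regression alongside the other drivers of the KPI.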

Using the same KPIs across all analyses lets us compare TV effectiveness at multiple points: at a granular level immediately after a spot, at a daily level over the whole campaign, and even across changes that occur over years.

Innovation is key in media measurement, as we are constantly able to gather more data and apply new machine learning methods. The effectiveness of measurement ultimately depends on choosing the right methods for the questions being asked, and on using reliable KPIs drawn from a clean dataset.

Veronika Hulikova

Senior Econometrician
